Google Maps Unveils New Gemini-Powered AI Tools for Developers and Users

Key Points

- Builder Agent generates map prototypes from simple text prompts.

- Styling agent applies custom visual themes to maps for brand consistency.

- MCP server links AI assistants directly to Google Maps documentation.

- Grounding Lite lets external AI models use Maps data via Model Context Protocol.

- Contextual View provides low‑code visual answers such as lists, maps, or 3D displays.

- Hands‑free Gemini navigation enables spoken, natural‑language directions.

- Region‑specific alerts add incident and speed‑limit information for drivers.

Google Maps announced a suite of AI‑driven features powered by Gemini models, aimed at both developers and everyday users. The rollout includes a Builder Agent that generates map‑based prototypes from text prompts, a styling agent for custom map designs, and a Model Context Protocol (MCP) server that links AI assistants to Maps documentation. Grounding Lite lets external AI models draw on Maps data via MCP, and Contextual View provides low‑code visual answers to location queries. The enhancements also extend to consumer experiences, with hands‑free Gemini navigation and region‑specific alerts in select markets.

AI‑Powered Enhancements to Google Maps

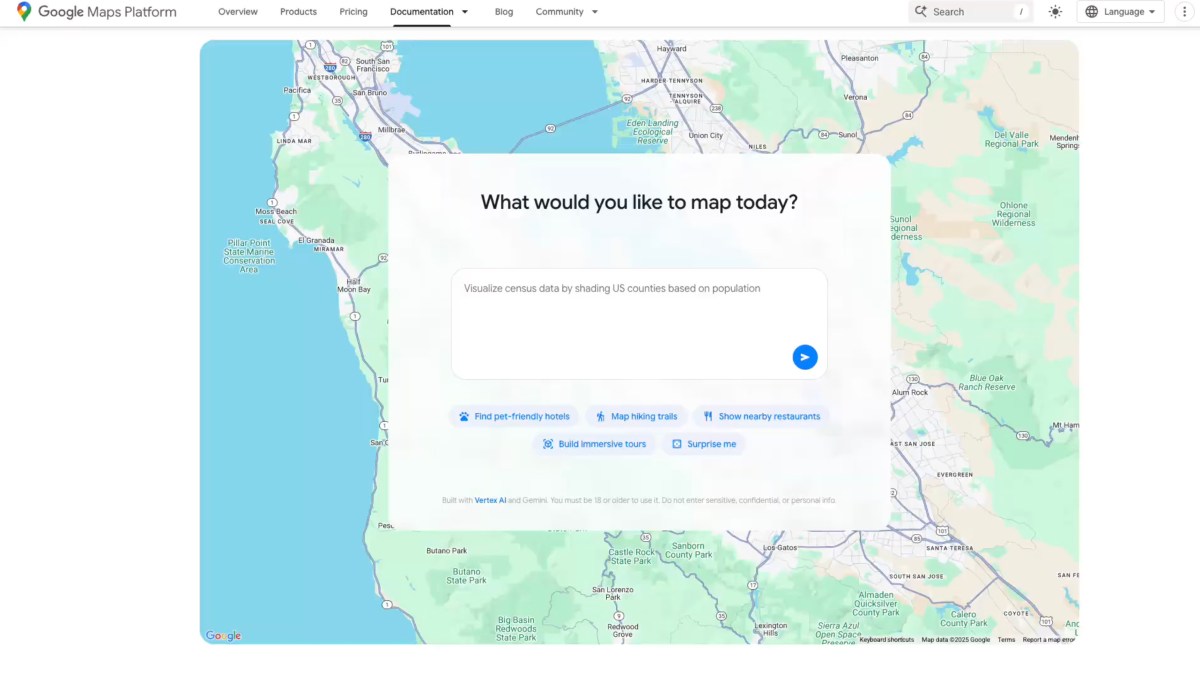

Google Maps is expanding its artificial‑intelligence capabilities by integrating Gemini models across a variety of new tools. The centerpiece of the launch is the Builder Agent, a coding assistant that transforms natural‑language descriptions into functional map‑based prototypes. Developers can ask it to create a Street View tour, visualize real‑time weather, or list pet‑friendly hotels, and the agent generates the underlying code. That code can be exported, previewed with the developer’s own API keys, or edited further in Firebase Studio.

Complementing the Builder Agent is a styling agent that lets developers apply specific visual themes to maps, enabling brands to maintain consistent color schemes and design language across their location‑based services.

Connecting AI Assistants to Maps Documentation

The Model Context Protocol (MCP) server, also described as a code‑assistant toolkit, connects AI assistants directly to Google Maps’ technical documentation. Developers can ask an assistant about API behavior and data‑usage guidelines while they work, which streamlines development and reduces the need to search external resources.
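
To make the protocol side of this concrete, here is a minimal sketch of the kind of message an AI assistant sends to an MCP server. MCP messages are JSON‑RPC 2.0, and tools are invoked with the `tools/call` method. The tool name `search_docs` and its `query` argument are hypothetical placeholders, not names taken from the Google Maps server; a real client would discover the available tools through `tools/list`.

```python
import json
from itertools import count

# Monotonically increasing request ids, as JSON-RPC 2.0 expects each
# in-flight request to carry a unique id.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool name and argument, used for illustration only.
message = build_tool_call("search_docs", {"query": "Places API usage limits"})
print(message)
```

The sketch only builds the request. Sending it over a transport and reading the response is left to whichever MCP client library the assistant uses.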

Grounding Lite, another Gemini‑driven feature, enables developers to anchor their own AI models to Maps data via MCP. Once a model is grounded this way, an AI assistant can answer location‑specific questions such as “How far is the nearest grocery store?” with accurate, context‑aware responses.
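
As a rough illustration of what grounding means for the grocery‑store question, the sketch below computes the answer from place records instead of letting a model guess. It does not use Grounding Lite or any Maps API: the place records, their field names, and the function names are all hypothetical, and the distance is a straight‑line (haversine) estimate, not the routed distance a Maps service would return.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/lng points."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = (sin(dlat / 2) ** 2
         + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def nearest_place(origin, places):
    """Return the place record closest to `origin`, a (lat, lng) tuple."""
    return min(
        places,
        key=lambda p: haversine_km(origin[0], origin[1], p["lat"], p["lng"]),
    )


def grounded_answer(origin, places):
    """Build an answer string from data rather than from model recall."""
    place = nearest_place(origin, places)
    dist = haversine_km(origin[0], origin[1], place["lat"], place["lng"])
    return f"The nearest grocery store is {place['name']}, about {dist:.1f} km away."


# Made-up records standing in for data a grounded model would receive.
stores = [
    {"name": "Store A", "lat": 0.0, "lng": 1.0},
    {"name": "Store B", "lat": 0.0, "lng": 0.1},
]
print(grounded_answer((0.0, 0.0), stores))
```

The point of the pattern is that the distance in the reply comes from a calculation over supplied data, so the assistant's wording can vary while the figure stays verifiable.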

Low‑Code Visual Answers for Users

Contextual View is a low‑code component that presents visual answers to user queries. Depending on the request, the component can display a list, a map view, or a three‑dimensional representation, providing an intuitive way for users to understand distance, routing, or point‑of‑interest information.

Consumer‑Facing Features

Beyond developer tools, Google Maps is adding Gemini‑powered capabilities for everyday users. Hands‑free navigation now uses Gemini to interpret spoken, natural‑language requests, offering a more natural interaction model. In select regions, the app also shows incident alerts and speed‑limit data, improving safety and situational awareness for drivers.

These updates reflect Google’s broader strategy to embed generative AI throughout its ecosystem, making both creation and consumption of map‑related content more efficient and personalized.