Anthropic Launches Free Incognito Mode for Claude AI

Key Points

- Free incognito mode now available for all Claude users.

- Incognito chats are excluded from history and long‑term memory.

- Incognito chats are kept only for a temporary 30‑day safety retention period, then deleted.

- Memory features remain limited to Team and Enterprise plans.

- Memory allows Claude to retain context, notes, and preferences across sessions.

- Incognito and memory functions operate independently, giving users clear choices.

- Anthropic highlights transparency with visible incognito icons and labels.

- Free availability on every tier and clear labeling set Claude apart from rivals like ChatGPT and Google Gemini, which also offer private chats.

Anthropic has introduced a free incognito mode for its Claude chatbot, allowing users on any subscription tier, including the free level, to conduct private, unsaved conversations. When the mode is activated, chats are left out of history and are not retained in the AI’s long‑term memory; they are kept only for a temporary 30‑day safety retention period. The feature complements Claude’s newer memory system, which remains limited to Team and Enterprise plans and enables the bot to recall context across sessions. By separating privacy and memory, Anthropic aims to give users clear control over what the AI remembers, positioning Claude against rivals like OpenAI’s ChatGPT and Google Gemini.

Incognito Mode Expands Privacy Options for All Users

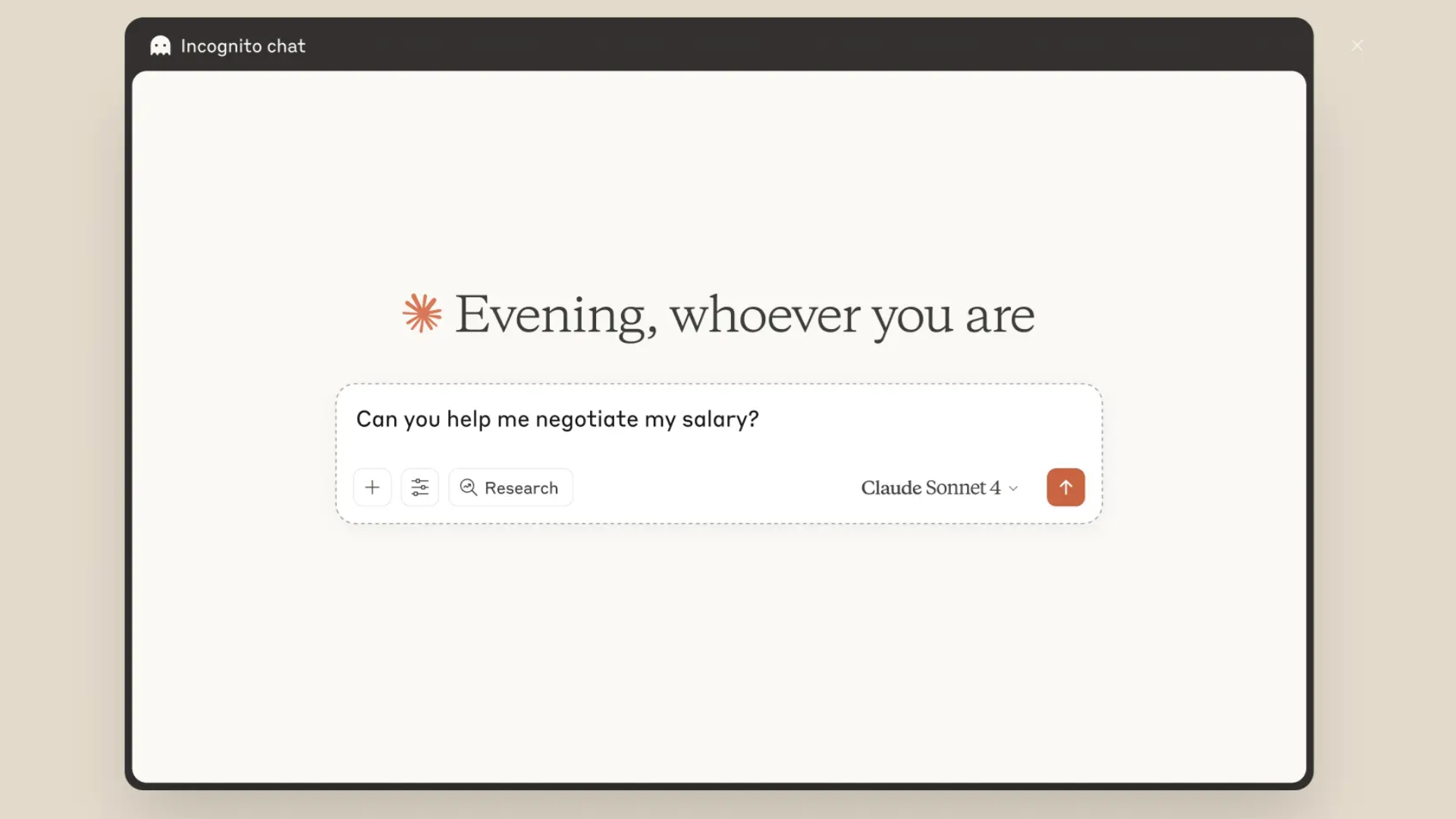

Anthropic has rolled out a new incognito mode for its Claude chatbot that is available to every user, regardless of subscription level. When a user clicks the ghost icon at the start of a chat, the conversation is flagged as incognito, indicated by a black border and label. In this mode, the dialogue is omitted from the user’s chat history and is not stored in Claude’s long‑term memory. The conversation is retained only for a temporary 30‑day period for safety purposes, after which the data is deleted.

Memory Features Remain Separate

Claude’s broader memory system, recently introduced for Team and Enterprise subscribers, allows the AI to retain context across multiple interactions, store project‑specific notes, and remember user preferences. This memory is isolated by project, ensuring that work‑related chats do not bleed into personal conversations. The incognito mode does not interfere with these memory capabilities; rather, it offers a clean‑slate alternative when users prefer no persistence.

Balancing Privacy and Continuity

The coexistence of incognito mode and memory features gives users granular control over their data. Users can choose incognito for exploratory or sensitive queries, knowing the conversation will not appear in their history or feed Claude’s long‑term memory, and that it is kept only for the temporary 30‑day safety retention period. When continuity is desired, such as when tracking a long‑term project, users with access to memory can enable it, allowing Claude to pick up where it left off. This dual approach mirrors familiar web‑browser privacy tools while extending them to conversational AI.

Competitive Landscape

Anthropic’s move highlights a growing emphasis on privacy in AI chat services. While OpenAI’s ChatGPT and Google’s Gemini also provide private‑chat options and memory functions, Anthropic distinguishes itself by offering incognito mode for free across all tiers and by clearly labeling the privacy status of each conversation. This transparency is intended to lower the barrier for users who are curious about AI but wary of data trails.

Implications for Users

Incognito mode encourages experimentation, enabling users to ask unconventional or personal questions without that information being saved to their chat history or Claude’s long‑term memory. It also serves professionals who need a sandbox for brainstorming without cluttering long‑term project memory. By providing both privacy and memory as separate, optional tools, Anthropic positions Claude as a flexible assistant adaptable to a wide range of use cases.