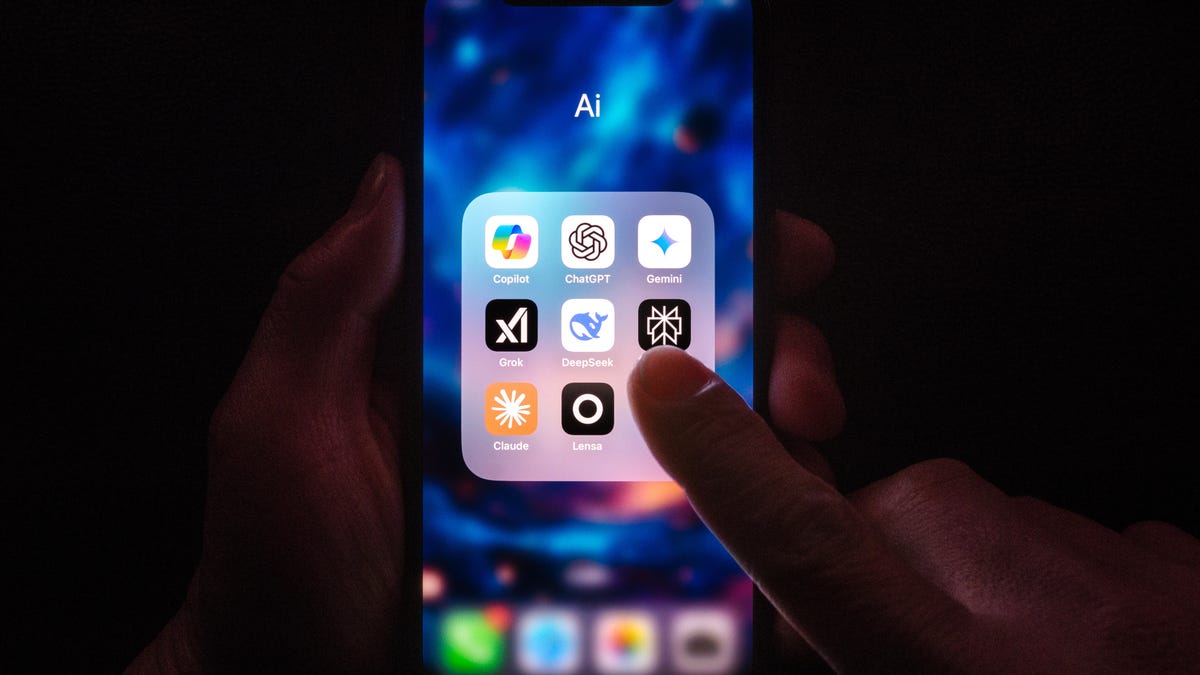

11 Situations Where Using ChatGPT Can Backfire

Key Points

- ChatGPT can help organize health information but cannot replace a medical exam.

- It offers mental‑health tips but lacks professional licensing and empathy.

- In emergencies, use real‑world safety measures, not chatbot advice.

- Financial and tax guidance should come from qualified accountants.

- Never input confidential or regulated data into the model.

- Using the AI for cheating or illegal activities is prohibited.

- For real‑time news, rely on live feeds rather than the chatbot.

- Gambling predictions from ChatGPT are unreliable.

- Legal documents need attorney review; the AI is not a substitute.

- Art created by AI should not be claimed as original human work.

ChatGPT excels at drafting questions, translating jargon, and offering basic explanations, but it falls short when asked to diagnose health conditions, provide mental‑health support, make emergency safety decisions, handle personalized finance or tax planning, process confidential data, or create legally binding documents. It should also not be used for cheating or illegal activity, and it is unreliable for real‑time news monitoring, gambling advice, and art presented as original human work. Users are urged to treat the AI as a supplemental tool rather than a replacement for professionals in these high‑risk areas.

Health Diagnosis Is Not a ChatGPT Specialty

While the model can help users organize symptom timelines and translate medical terminology, it cannot examine patients or order labs. Relying on its suggestions for a diagnosis can lead to serious misinterpretation, since the same symptoms may be attributed to anything from a minor ailment to a severe condition such as cancer.

Mental‑Health Support Requires Human Empathy

ChatGPT can share grounding techniques, yet it lacks lived experience and professional licensing and cannot read body language. In a crisis, users should contact a qualified therapist or an emergency hotline rather than depend on the AI.

Emergency Safety Decisions Must Be Immediate

The system cannot detect hazards such as carbon monoxide or fire, nor can it call emergency services. In urgent situations, users should evacuate and dial 911 before consulting any chatbot.

Personal Finance and Tax Planning Are Too Complex

ChatGPT can explain concepts such as ETFs, but it does not have access to an individual’s income, deductions, or current tax rates. Professional accountants remain essential for accurate, personalized advice.

Confidential and Regulated Data Should Not Be Shared

Inputting embargoed press releases, medical records, or any data protected by privacy laws risks exposure, as the AI’s processing occurs on external servers. Users must treat the model like any public platform and avoid uploading sensitive information.

Illicit Activities Are Outside the Model’s Scope

Using ChatGPT to facilitate illegal behavior is prohibited, and users should not attempt it.

Academic Integrity Is Compromised by AI‑Generated Work

While ChatGPT can serve as a study aid, using it to produce essays or exam answers constitutes cheating and can lead to disciplinary action.

Real‑Time News and Data Require Dedicated Sources

Although the model can fetch fresh web pages, it does not continuously stream updates. For time‑critical information, users should rely on live feeds and official releases.

Gambling Advice Is Unreliable

The AI may hallucinate player statistics or injury reports, making it unsuitable for betting decisions.

Legal Documents Demand Professional Drafting

ChatGPT can outline basic legal concepts, but drafting contracts, wills, or trusts without attorney oversight risks non‑compliance with state‑specific laws.

Artistic Creation Should Remain Human‑Driven

While the tool can help brainstorm ideas, passing off AI‑generated art as one’s own original work is discouraged.

Overall, the recommendation is to treat ChatGPT as an auxiliary resource for low‑risk tasks and to consult qualified professionals for any high‑stakes decisions.