OpenAI's Sora Video Generator Misses the Mark in IVF Explainer Test

Key Points

- Reporter used Sora to generate IVF‑related video clips for an explainer.

- Most outputs contained scientific inaccuracies and misspelled text.

- Visual errors included extra limbs, malformed anatomy, and unrealistic fluids.

- A few newborn clips approached realism after extensive prompt tweaking.

- The test highlights current limitations of AI video for specialized medical topics.

- Creators should anticipate significant editing or alternative footage sources.

- Future improvements may make Sora more viable for precise visual storytelling.

A reporter undergoing IVF tested OpenAI's Sora AI video generator to create footage for an explainer on the fertility industry. While the tool produced a handful of usable clips, most outputs contained glaring scientific inaccuracies, nonsensical text, and visual errors such as misplaced anatomy and extra limbs. The experiment highlights current limitations of AI‑generated video for specialized medical storytelling and suggests that creators should approach Sora with caution until its capabilities improve.

Background and Motivation

A journalist currently undergoing in‑vitro fertilization (IVF) sought to use OpenAI's Sora, an AI‑driven video generation system, to produce realistic B‑roll for an upcoming explainer on the fertility industry. The goal was to avoid some of the difficulties of on‑camera production and to obtain visuals that would complement the reporter's personal commentary.

Testing Process

The reporter accessed Sora directly through its chat interface and entered prompts describing specific IVF‑related scenes, such as embryo development, female reproductive anatomy, and medication setups. The reporter then iterated on these prompts several times, adjusting them to improve visual fidelity and to correct textual errors.

Key Findings

Most generated clips displayed significant shortcomings. Scientific details were often inaccurate: embryo dishes showed misplaced objects, and anatomical diagrams contained misspelled terminology. Text overlays featured nonsensical words and garbled phrases, which are telltale signs of AI‑generated content. Visual anomalies included extra fingers, malformed limbs, and unrealistic fluids in medical equipment.

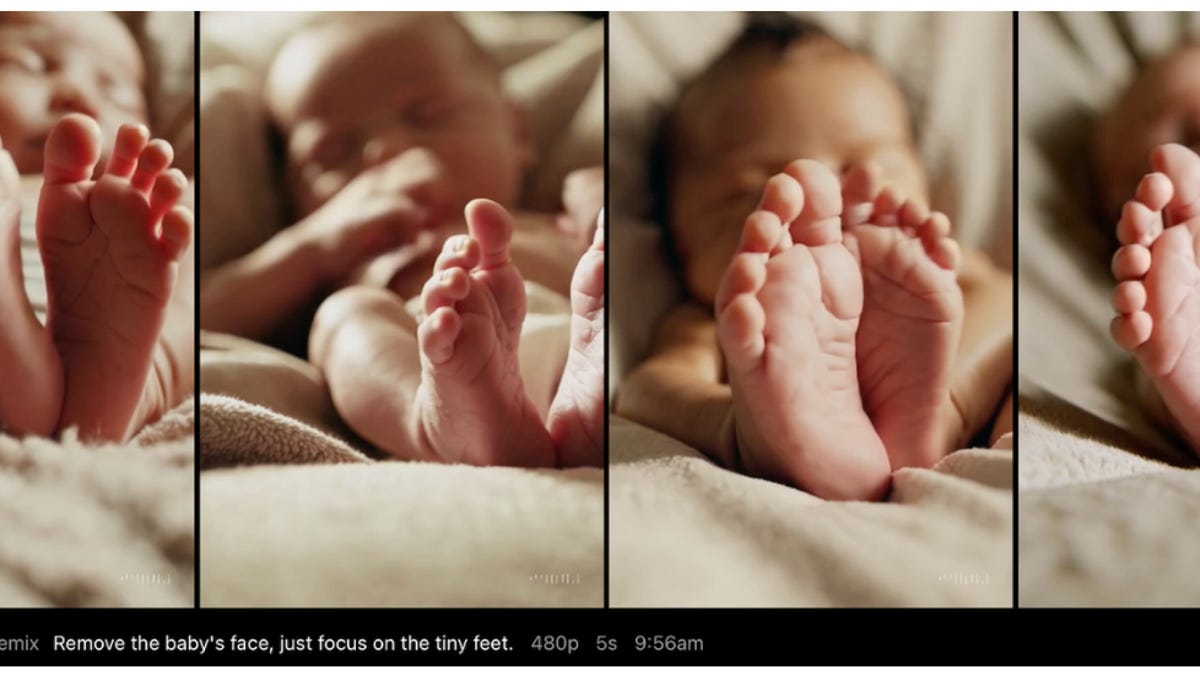

Some clips, such as a close‑up of a newborn baby, approached realism and were deemed passable. However, even these required extensive prompt refinement and still showed occasional errors, such as incorrect toe counts.

Implications for Content Creators

The experiment underscores that while Sora can produce basic visual material, it struggles with the domain‑specific accuracy and fine visual detail that medical storytelling requires. Creators covering high‑stakes topics, especially those that depend on precise scientific imagery, should expect extensive post‑production editing or the need to supplement AI output with stock footage.

Conclusion

OpenAI's Sora shows promise in democratizing video creation, yet its current limitations make it unsuitable for detailed, accurate representations of complex medical procedures. The reporter plans to revisit the tool once newer versions become available, but advises caution for those seeking reliable, error‑free visual content in specialized fields.