OpenAI Launches ChatGPT Health Feature with Enhanced Safeguards

Key Points

- ChatGPT Health adds a dedicated health tab inside the existing ChatGPT app.

- It uses the same AI model as ChatGPT but with stricter medical safeguards.

- Physicians reviewed model responses, and an evaluation framework called HealthBench assesses safety.

- Users can sync health apps, upload documents, and get plain‑language explanations.

- Health data are encrypted, isolated, and not used to train foundation models by default.

- It is a consumer‑focused tool, not covered by HIPAA, and does not replace clinicians.

- Available in the US, Canada, Australia, parts of Asia and Latin America.

- OpenAI warns users to verify information and avoid self‑diagnosis or medication changes.

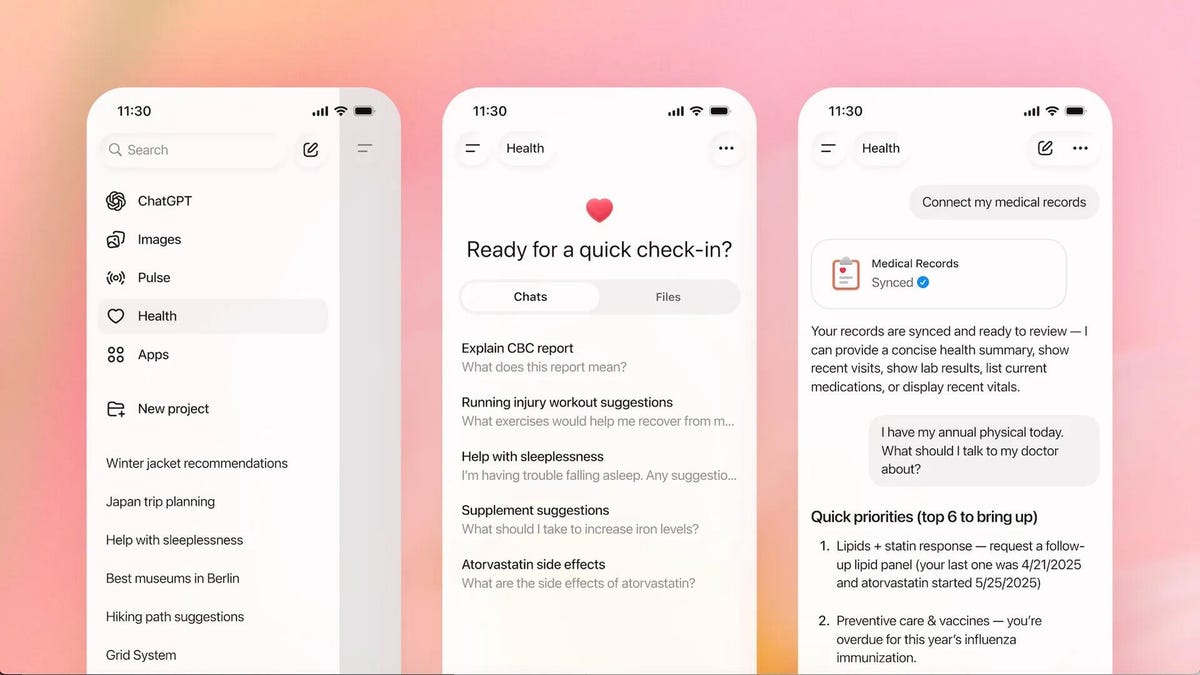

OpenAI has introduced ChatGPT Health, a dedicated health‑focused tab within the ChatGPT app that offers users a safer way to ask medical questions, review lab results, and organize health information. The feature uses the same large language model as standard ChatGPT but adds stricter safety limits, responses shaped by physician review, and extra encryption to protect sensitive data. It can sync with apps such as Apple Health and accept uploaded documents, but it does not replace professional diagnosis or treatment. OpenAI stresses that the tool is meant for consumer wellness and is not covered by HIPAA, while acknowledging ongoing risks such as hallucinations and the need for users to stay cautious.

What ChatGPT Health Is

ChatGPT Health is a new, dedicated space inside the existing ChatGPT interface that focuses on health‑related queries, documents, and workflows. It is not a separate app; users access it through the web or mobile version of ChatGPT without additional sign‑ups.

How It Works and What It Offers

The feature runs on the same underlying large language model as standard ChatGPT but adds context, grounding, and tighter constraints. Users can connect health data sources such as Apple Health, lab‑result services, and nutrition apps, and they may upload documents for analysis. The tool can translate medical language into plain English, summarize discharge notes, and help users prepare questions for upcoming appointments.

Physician Involvement and Safety Measures

OpenAI consulted hundreds of physicians across dozens of specialties to review model responses and evaluated the system with a framework called HealthBench. The system is designed to limit open‑ended medical advice, encourage users to seek professional care, and reduce risky hallucinations. OpenAI says its internal testing shows a substantial drop in errors compared with earlier models.

Privacy and Data Controls

Health conversations are encrypted at rest and in transit, with additional purpose‑built encryption and isolation. Access by OpenAI staff is limited to safety and security operations, and health data are not used to train foundation models by default. Users can disconnect apps, revoke data access, and delete health‑specific memories.

Limitations and User Guidance

OpenAI emphasizes that ChatGPT Health is not intended for diagnosis, treatment, or prescription decisions. The tool cannot order tests, prescribe medication, or confirm medical conditions. Users are warned to verify information with reputable sources or healthcare professionals and to avoid self‑diagnosis or medication changes based solely on AI output.

Availability

The feature is currently rolling out in select regions, including the United States, Canada, Australia, parts of Asia, and Latin America. It is not yet available in the European Economic Area, the United Kingdom, China, or Russia. Access may require joining a waitlist.