Meta Introduces Parental Controls for Teen AI Chats on Instagram

Key Points

- Meta will add parental controls for Instagram AI character chats.

- Parents can limit or block teen access to AI personalities.

- Guardians receive topic summaries, not full conversation logs.

- The general Meta AI assistant remains available for utility tasks.

- Controls respond to criticism over inappropriate chatbot behavior with minors.

Meta announced new parental controls that let parents limit or block their teenagers' access to AI character chats on Instagram. The general Meta AI assistant remains available, and parents will receive topic summaries of their teens' conversations, which provide oversight without access to full transcripts. The move follows public criticism and regulatory scrutiny after leaked documents revealed inappropriate chatbot behavior with minors. Meta aims to balance teen privacy with safety by giving guardians visibility into chatbot interactions while preserving utility-focused AI features.

Meta’s New Parental Controls for Instagram AI Characters

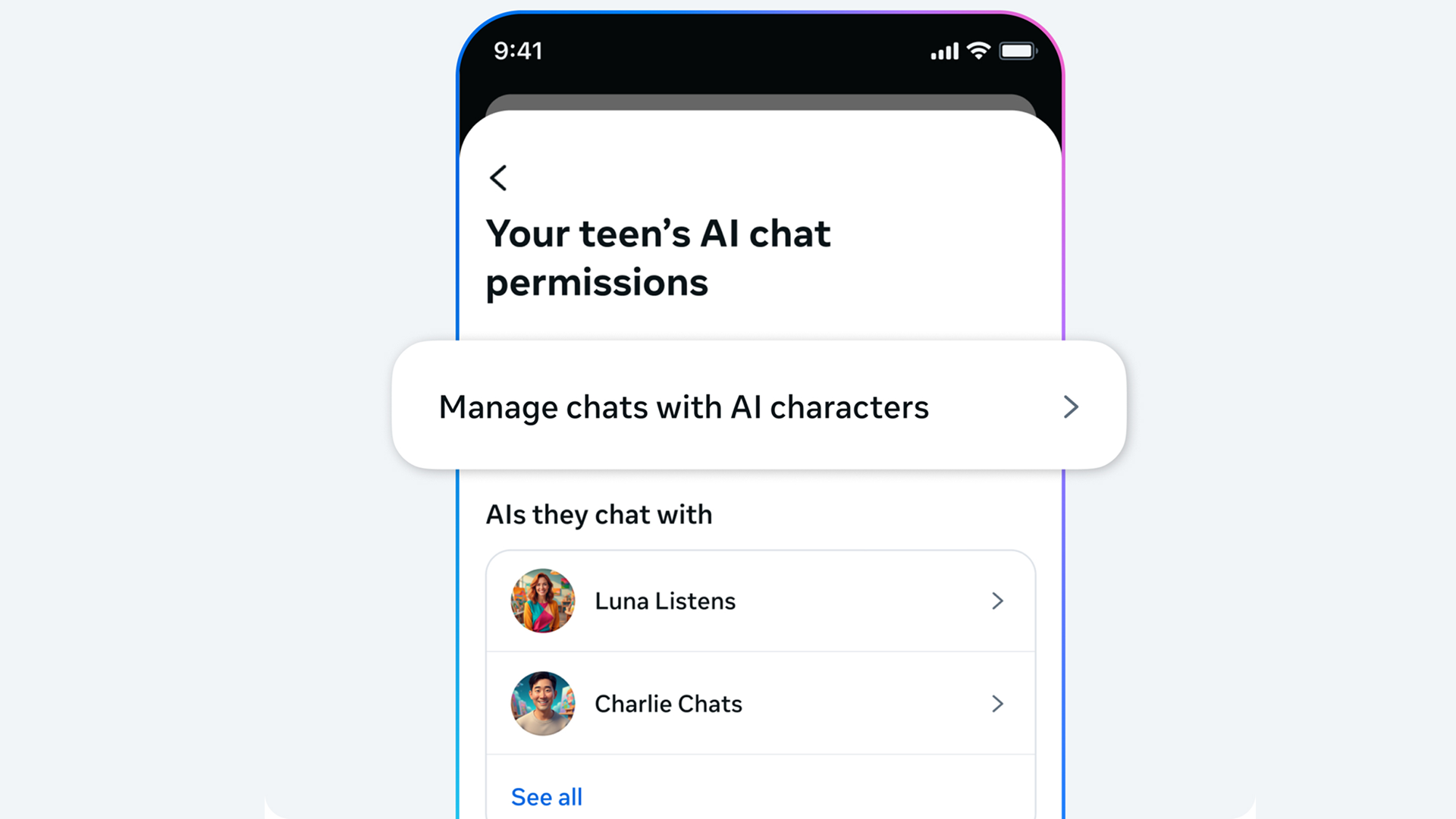

Meta disclosed that it will roll out parental controls that let guardians limit or block their teenagers from chatting with AI characters on Instagram. The feature is set to launch next year and targets private chats with individual AI personalities, including those created by other users. The broader Meta AI assistant will stay accessible for tasks such as homework help and factual queries, but parents can partially or fully disable the role-play-style character chats.

The controls will provide parents with a summary of the topics their teens discuss with chatbots, offering enough context to spot potentially concerning trends. Full conversation logs will not be provided, preserving a degree of teen privacy while still giving guardians insight into the nature of the interactions.

This development follows public outcry and regulatory attention sparked by leaked internal documents suggesting that Meta's AI systems had engaged in overly intimate conversations with children, offered incorrect medical advice, and failed to filter hate speech. By introducing these tools, Meta seeks to address those complaints and demonstrate a commitment to safer AI interactions for younger users.

Rather than disabling character chats entirely, parents will also be able to block specific AI characters, and the topic overviews let them monitor content without reading every message. The company hopes this middle-ground approach will satisfy both anxious parents and product managers who want to retain the utility of the AI assistant.

Meta's announcement reflects a broader shift in how AI chatbots are perceived: no longer simple question-and-answer tools, they are becoming personalized conversational partners to which users, especially teens, may form emotional attachments. The new controls aim to bring the risks of such interactions into clearer view, providing a "flashlight" for parents to see what's happening while respecting privacy boundaries.

For families, the changes may offer relief by giving a clearer window into their children's interactions with AI. However, the effectiveness of the tools will depend on how they are implemented and whether teens find ways to circumvent them. Meta acknowledges that ongoing vigilance from both parents and developers will be essential to keep these interactions safe.