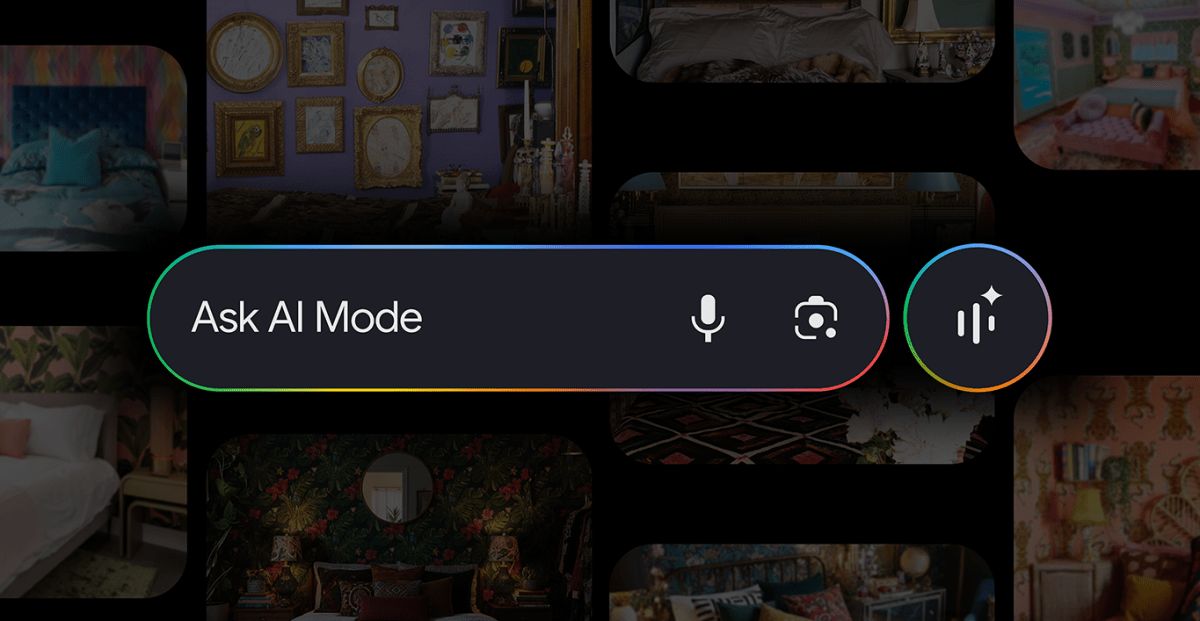

Google’s AI Mode Brings Conversational Image Search to Users

Key Points

- Google AI Mode now supports natural‑language image searches.

- Users can describe items like “barrel jeans that aren’t too baggy” and refine results conversationally.

- A search can start with an uploaded or newly taken photo, combined with a text description.

- Results include shoppable options, linking directly to retailer sites.

- Powered by Gemini 2.5, the update uses multimodal AI to interpret both images and natural-language text.

- Available in English to U.S. users, with rollout taking a few days to reach all accounts.

Google has rolled out an update to its AI Mode that lets users search for images using natural, conversational language. Shoppers and browsers can describe what they want, as they would to a friend, and receive visual results that can be refined with follow-up requests. Users can also start a search by uploading a reference photo or taking a picture, then add text to narrow the results. The update, powered by Gemini 2.5 and its multimodal capabilities, is currently available in English to U.S. users.

Conversational Search Redefined

Google’s latest AI Mode update transforms image search from a series of filters into a fluid, conversational experience. Rather than selecting options for color, size, or brand, users can simply describe their desired items in everyday language. For example, a shopper can ask for “barrel jeans that aren’t too baggy” and then follow up with requests such as “more ankle length” or “show me acid‑washed denim.” The system interprets these natural‑language prompts and delivers a curated set of visual results that match the described vibe.

Visual and Textual Fusion

The new capability also supports a hybrid approach. Users may upload a reference image or snap a photo, then complement it with textual descriptors to fine‑tune the results. This blend of visual and linguistic inputs lets the AI recognize subtle details, secondary objects, and nuanced context within images, producing more accurate and relevant matches.

Shopping Integration

Beyond inspiration, AI Mode’s conversational search is tightly linked to shopping. The generated results include shoppable options, allowing users to click through to retailers directly from the search interface. Google highlights that the system intelligently curates a set of purchasable items based on the user’s description, streamlining the path from discovery to purchase.

Technical Foundations

The upgrade builds on Lens and Image Search in Google Search, combined with the multimodal language model Gemini 2.5. Together, these technologies let the AI parse both visual input and natural language, including secondary objects and subtle details in an image, so that results more closely match the user's intent.

Rollout Details

The conversational image search feature is rolling out in English to users in the United States. Google notes that it may take a few days for the new capabilities to appear for all users. Once the update reaches an account, users will see richer visual results and can refine searches with natural follow-up requests.

Implications for Users

This shift toward conversational interaction lowers the barrier for discovering products and visual content. Users no longer need to know exact keywords or navigate complex filter menus; instead, they can rely on everyday language to guide the search. The integration of image upload further expands possibilities, making it easier to find visually similar items or explore design ideas without precise terminology.