Google Maps Integrates Gemini AI for Enhanced Navigation and Hands‑Free Queries

Key Points

- Google Maps now includes Gemini AI for voice‑based, conversational queries while driving.

- Drivers can ask about restaurants, parking, and traffic, and even add calendar events, without touching the phone.

- Navigation cues now reference visible landmarks identified from Street View imagery.

- Gemini cross‑references 250 million places with Street View to select prominent landmarks.

- Google Lens integration lets users point at a location and ask Gemini to explain it.

- The rollout begins on iOS and Android devices, with Android Auto support forthcoming.

- Traffic alerts launch first for Android users in the United States.

- Landmark‑based navigation will be available on both iOS and Android in the United States.

Google has upgraded Maps with its Gemini artificial‑intelligence model, letting users ask conversational questions while driving, receive landmark‑based turn directions, and use Lens to identify places. Drivers can request restaurant recommendations and traffic updates, and even add calendar events, without touching their phones. Gemini cross‑references 250 million places with Street View imagery to highlight visible landmarks in navigation cues. The rollout will begin on iOS and Android devices, with Android Auto support slated for the near future.

AI‑Powered Enhancements Over the Past Year

Over the last year, Google has layered multiple artificial‑intelligence features into Maps to improve discovery and enable users to ask questions about places. These upgrades set the stage for the latest integration of Gemini, Google’s advanced AI model, which expands the app’s conversational capabilities.

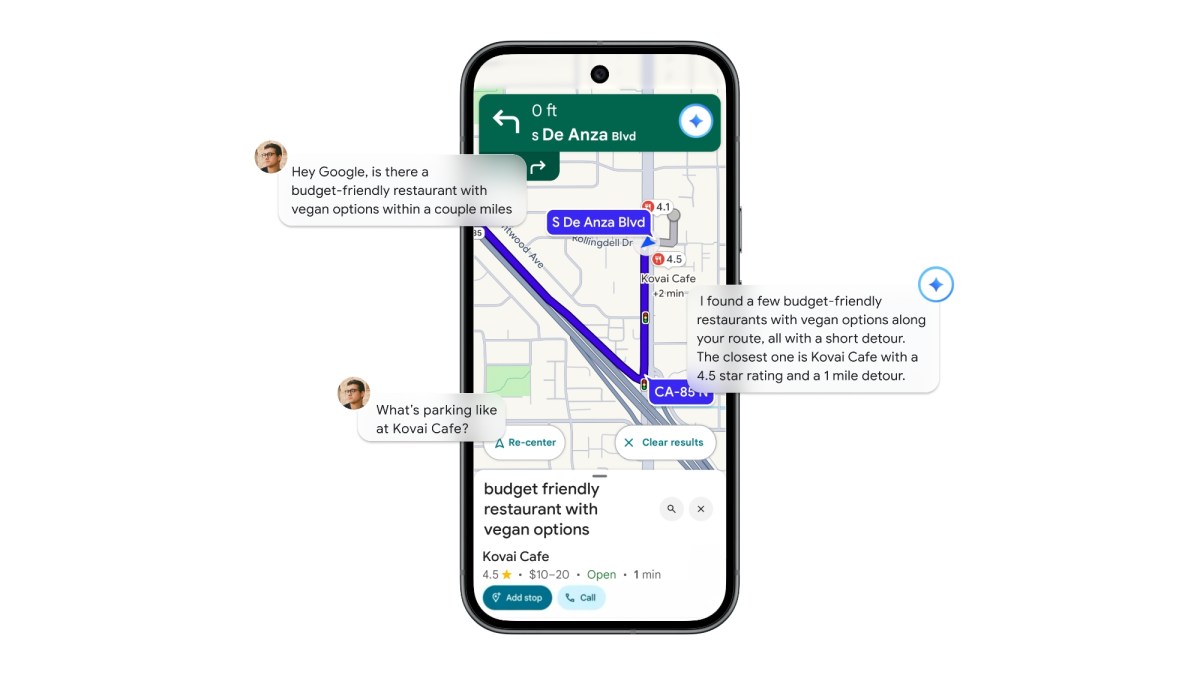

Gemini Enables Conversational Queries While Driving

With Gemini, drivers can now speak naturally to Maps and receive answers about points of interest along their route. Users can ask for budget‑friendly vegan restaurants within a few miles, inquire about parking conditions, or request real‑time sports and news updates, all without touching the phone. The system supports multi‑question conversations, allowing follow‑up queries such as “What’s parking like there?”

Landmark‑Based Turn Instructions

Google is also combining Gemini with Street View data to make navigation cues more intuitive. Instead of generic distance‑based prompts, Maps now mentions nearby landmarks, such as gas stations, restaurants, or famous buildings, when directing drivers to turn. Gemini cross‑references information about 250 million places with Street View images to identify landmarks that are visible from the road, helping users recognize turns more easily.

Google Lens Integration

Maps now works together with Google Lens, allowing users to point their camera at a location and ask Gemini, “What is this place and why is it popular?” The AI can answer questions about surroundings, blending visual recognition with its extensive knowledge base.

Rollout Plans

Google said the Gemini navigation features will roll out to iOS and Android devices in the coming weeks, with Android Auto support arriving soon after. Traffic alerts will launch first for Android users in the United States, while landmark‑based navigation will be available on both iOS and Android in the United States. Lens with Gemini will become available in the United States later this month.