Google Maps Integrates Gemini AI for Conversational Hands‑Free Navigation

Key Points

- Gemini AI replaces Assistant in Google Maps for conversational navigation.

- Users can ask for budget‑friendly restaurants, parking info, and add calendar events without leaving the app.

- Landmark‑based directions replace generic distance cues, e.g., "turn left after the Thai Siam Restaurant."

- Proactive traffic alerts are delivered even when users are not actively navigating.

- Lens integration allows on‑the‑spot queries about nearby places via the camera.

- Rollout begins on Android and iOS, with future support for Android Auto.

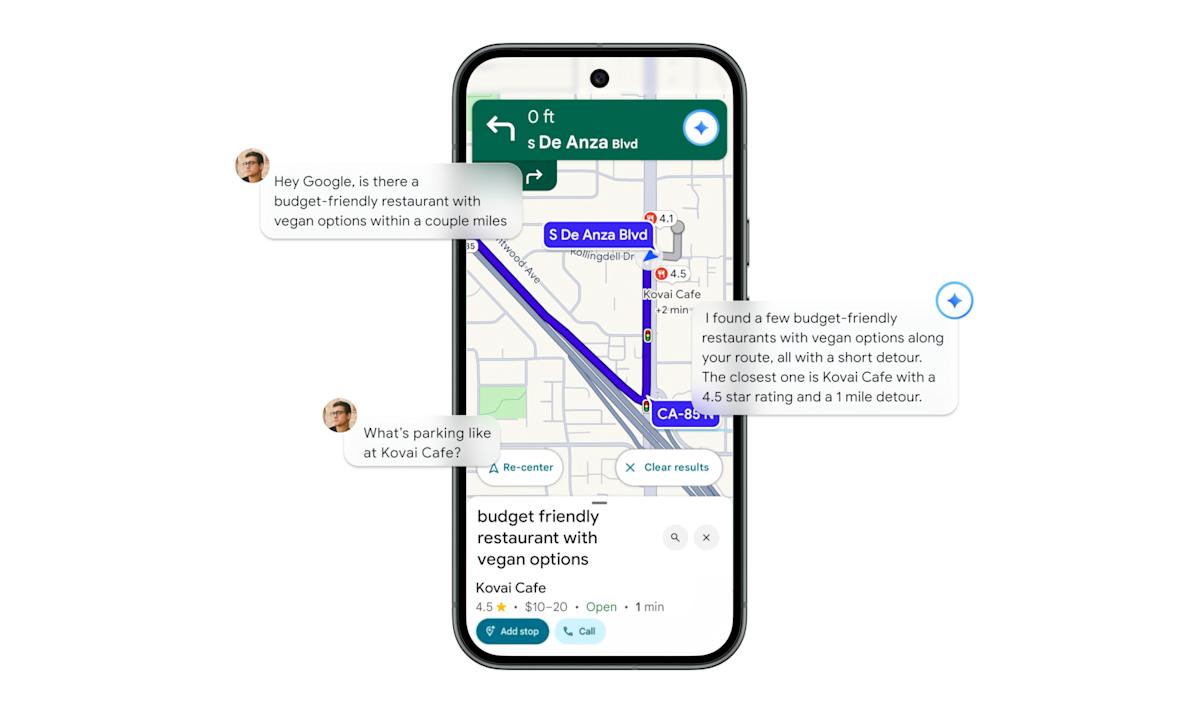

Google is adding its Gemini AI assistant to Google Maps, enabling users to interact with the app through natural conversation while navigating. The upgrade lets drivers ask for budget‑friendly restaurants along a route, request parking information, and add calendar events without leaving the map. Gemini also provides landmark‑based directions, delivers proactive traffic alerts, and works with Lens to answer on‑the‑spot questions about nearby places. The rollout begins on Android and iOS devices and will later extend to Android Auto.

Gemini Integration Overview

Google is replacing the traditional Assistant with its Gemini AI across all of its apps, and Maps is the latest to receive the upgrade. The new AI assistant allows users to converse with the app while navigating, keeping their hands free and eyes on the road. The feature is being rolled out over the next few weeks to Android and iOS devices in all regions where Gemini is already available, with future support planned for Android Auto.

Conversational Navigation Features

Gemini lets drivers ask natural‑language questions such as, “Is there a budget‑friendly Japanese restaurant along my route within a couple of miles?” After receiving an answer, users can ask follow‑up questions about parking availability, popular dishes, or other details without exiting the Maps interface. Once a decision is made, a simple command like, “Okay, let’s go there,” starts turn‑by‑turn navigation.

The assistant also handles auxiliary tasks while driving. Users can ask it to add calendar events, provided they have granted the necessary permissions, and can report road conditions by saying, “there’s flooding ahead” or “I see an accident.” These reports feed community‑based reporting and help keep the map data current.

Landmark Directions and Proactive Alerts

In the United States, Gemini‑enhanced directions now reference easily recognizable landmarks. Instead of generic cues like “turn left in 500 feet,” the AI might say, “turn left after the Thai Siam Restaurant,” and highlight that landmark visually on the map. This approach aims to make navigation more intuitive.

Gemini also proactively notifies users of road disruptions even when they are not actively navigating. Android users will receive these alerts automatically, helping drivers stay informed about traffic conditions before they start a trip.

Lens Integration and Future Rollout

Later this month, Lens will be integrated with Gemini inside the Maps app. By tapping the camera icon in the search bar and pointing the camera at a nearby establishment, users can ask questions such as, “What is this place and why is it popular?” Gemini will provide contextual answers, combining visual recognition with conversational AI.

Together, conversational navigation, landmark‑based directions, proactive alerts, and Lens integration represent a significant step toward a more interactive, hands‑free driving experience. Google plans to expand these features to Android Auto, bringing AI‑driven navigation to vehicle infotainment systems.