Google Gemini 3 Pro Delivers Mixed Performance in Real‑World Tests

Key Points

- Gemini 3 Pro offers upgraded reasoning and concise responses.

- Canvas workspace can combine text, images, and video to generate 3D visualizations.

- Generative UI creates magazine‑style visual pages for topics like travel itineraries.

- Gemini Agent can organize Gmail, set reminders, and attempt reservations.

- Visual outputs show uneven quality; some generated 3D models lack detail.

- Gemini Agent sometimes misstates costs and asks for confirmation multiple times.

- Gemini has deeper Gmail integration than Perplexity and ChatGPT, but it sends emails more slowly than Perplexity.

- Overall experience is mixed, with strong multimodal features but inconsistent task execution.

Google’s Gemini 3 Pro model introduces upgraded reasoning, visual generation, and agentic capabilities, but hands‑on testing shows results that fall short of the company’s demos. The new Canvas workspace can combine text, images, and video to create interactive 3D visualizations, while the generative UI offers magazine‑style layouts for travel itineraries and other topics. Gemini Agent can organize Gmail, set reminders, and attempt reservations, yet it occasionally misstates costs and requires multiple confirmations. Compared with other AI assistants, Gemini excels at Gmail integration but lags in speed and consistency, delivering a mixed overall experience.

New Features and Promises

Google launched the Gemini 3 family, with Gemini 3 Pro as the first model available to users. The company markets the model as having stronger reasoning abilities, more concise responses, and advanced multimodal capabilities. Within the Gemini app, the Canvas workspace lets users feed text, images, and video together, prompting the AI to generate interactive 3D visualizations, richer user interfaces, and simulations. Google also promotes a generative UI that presents answers in visual, magazine‑style pages, and an "agentic" feature called Gemini Agent that can act on behalf of users for tasks such as email organization, reminder creation, and reservation booking.

Hands‑On Evaluation of Visual Generation

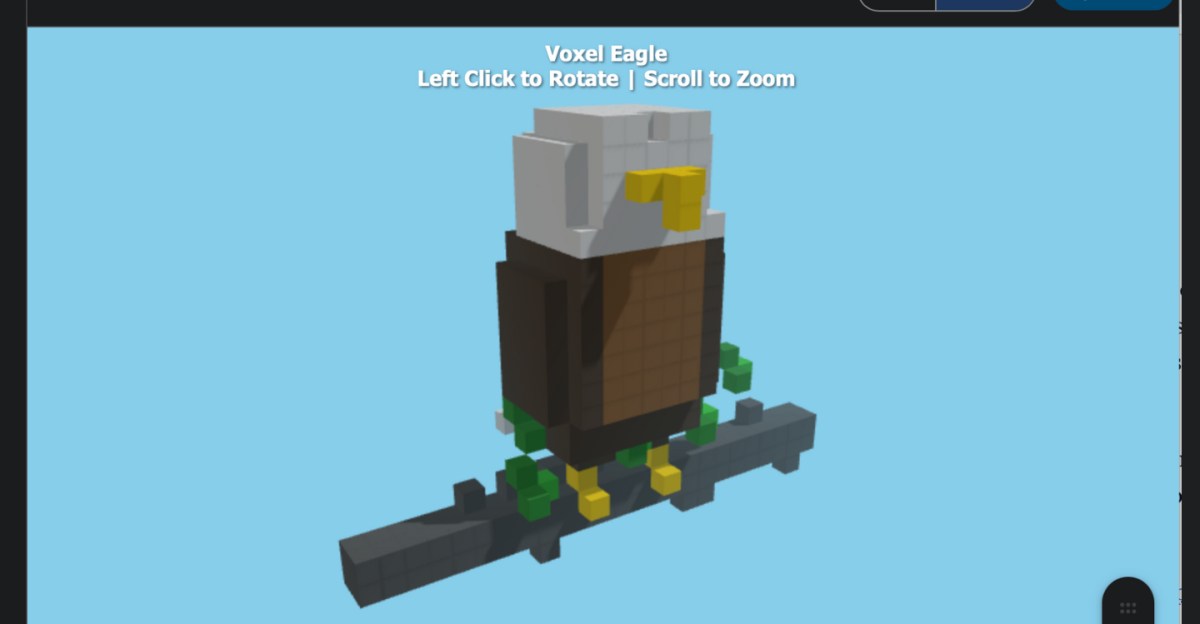

Testing the 3D visualization claims showed that Gemini 3 Pro can produce interactive models that roughly follow the demo content. When asked to illustrate a scale comparison from subatomic particles to galaxies, it generated a scrollable visual that listed the items in order. However, the image quality was uneven; the DNA strand and beach ball appeared dimmer than in Google’s showcase. Simpler prompts also fell short: a voxel‑style eagle came out missing details such as eyes and proper tree trunks, and other animal models looked primitive.

Generative UI and Interactive Layouts

The generative UI feature produced a personalized travel page for a three‑day trip to Rome. The layout included itinerary options, filters for pace and dining preferences, and the ability to redesign the page based on user selections. Similar interactive guides were demonstrated for topics like building a computer or setting up an aquarium, indicating the potential for visual, customizable content beyond plain text answers.

Gemini Agent’s Task‑Handling Abilities

Gemini Agent was evaluated for Gmail organization and reminder setting. When instructed to sort the inbox, the agent identified recent unread emails, displayed them in a chart, and offered buttons to archive promotional messages. It successfully created a reminder for a bill payment and placed it in Google Tasks with the correct due date. The agent also attempted to navigate a billing interface to pay the bill, but stopped before entering payment details due to security concerns.

Comparison with Other AI Assistants

In direct comparison, Gemini’s Gmail integration proved deeper than that of Perplexity and ChatGPT. While Perplexity could list emails, it required manual commands for actions, and ChatGPT operated in a read‑only mode despite being able to send emails. However, Gemini was slower at sending emails than Perplexity. When trying to book a restaurant reservation, Gemini reported a nonexistent cost, backpedaled by attributing it to the restaurant’s service charge, and asked for confirmation multiple times, highlighting inconsistencies in task execution.

Overall Assessment

The testing shows that Gemini 3 Pro delivers impressive visual and agentic features that align with Google’s promotional claims in broad strokes, yet the execution often falls short in detail, speed, and reliability. The model’s strengths lie in multimodal generation and deep Gmail integration, while its weaknesses include uneven visual quality, occasional misinformation about costs, and slower performance on routine tasks. Users may find the text‑based answers sufficient for everyday queries, reserving the advanced visual and agentic tools for occasional specialized needs.