FTC Launches Probe into AI Companion Chatbot Companies

Key Points

- FTC opens formal inquiry into AI companion chatbots.

- Seven companies—including Alphabet, Character.AI, Meta, OpenAI, Snap and X.AI—are asked to provide detailed information.

- Investigation focuses on how companies measure, test and monitor negative impacts on children and teens.

- Commission seeks information on data‑privacy practices and compliance with COPPA.

- Commissioner Mark Meador cited reports of chatbots amplifying suicidal ideation and engaging in sexually themed conversations with minors.

- Texas Attorney General launches separate probe into Character.AI and Meta AI Studio over privacy and mental‑health claims.

- Regulators emphasize immediate privacy and health risks of AI chatbots.

The Federal Trade Commission has opened a formal inquiry into several major developers of AI companion chatbots. The investigation, which is not yet linked to any regulatory action, seeks to understand how these firms measure, test and monitor potential negative impacts on children and teens, as well as how they handle data privacy and compliance with the Children’s Online Privacy Protection Act. Seven companies—including Alphabet, Character Technologies, Meta, OpenAI, Snap and X.AI—have been asked to provide detailed information about their AI character development, monetization practices and safeguards for underage users.

FTC Initiates Inquiry into AI Companion Chatbots

The Federal Trade Commission (FTC) has begun a formal investigation into companies that create artificial‑intelligence chatbots capable of acting as companions. While the probe does not yet signal any specific regulatory action, the commission wants these firms to answer questions about how they measure, test and monitor potential negative impacts on children and teens.

Companies Requested to Participate

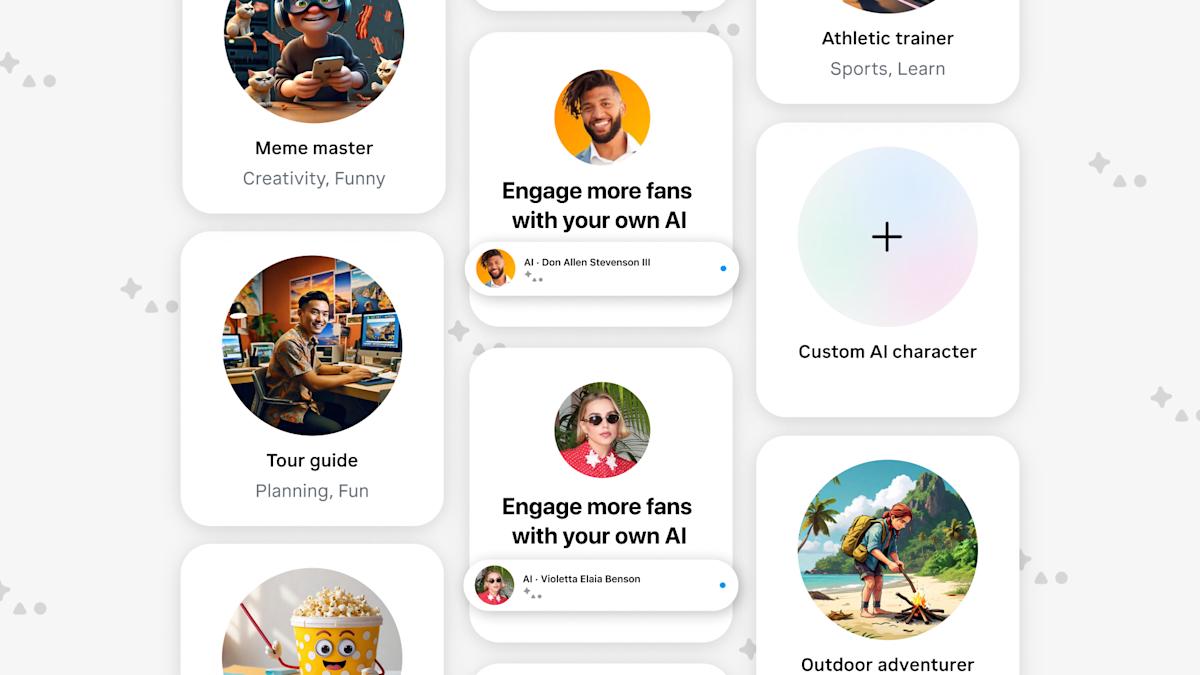

Seven firms have been asked to take part in the FTC’s inquiry. These include Alphabet, the parent company of Google; Character Technologies, the creator of Character.AI; Meta and its subsidiary Instagram; OpenAI; Snap; and X.AI. The commission is seeking a variety of information from each, covering how they develop and approve AI characters, how they monetize user engagement, and the data‑handling practices they employ to protect underage users.

Focus on Child Safety and Data Privacy

The FTC’s line of questioning emphasizes compliance with the Children’s Online Privacy Protection Act (COPPA). Investigators want to determine whether chatbot makers are adequately safeguarding the privacy of minors and whether they are adhering to legal standards concerning data collection and usage. The agency also wants insight into how these companies protect children from potentially harmful content or interactions.

Motivation Behind the Investigation

Although the FTC has not provided a clear rationale for the investigation, Commissioner Mark Meador referenced recent reporting by major newspapers that highlighted concerns such as chatbots amplifying suicidal ideation and engaging in sexually themed discussions with underage users. Meador suggested that if the facts uncovered through the inquiry indicate violations of the law, the commission should act promptly to protect vulnerable individuals.

Parallel State‑Level Scrutiny

In addition to the federal probe, the Texas Attorney General has launched a separate investigation focusing on Character.AI and Meta AI Studio. That state‑level inquiry centers on similar issues of data privacy and the practice of chatbots presenting themselves as mental‑health professionals, raising further questions about the broader regulatory landscape surrounding AI companions.

Regulatory Context and Industry Implications

The FTC’s move reflects a growing regulatory focus on the immediate privacy and health risks associated with AI chatbots, even as the long‑term productivity benefits of the technology remain uncertain. By seeking detailed disclosures from leading developers, the commission aims to build a clearer picture of industry practices and determine whether further enforcement actions may be warranted.