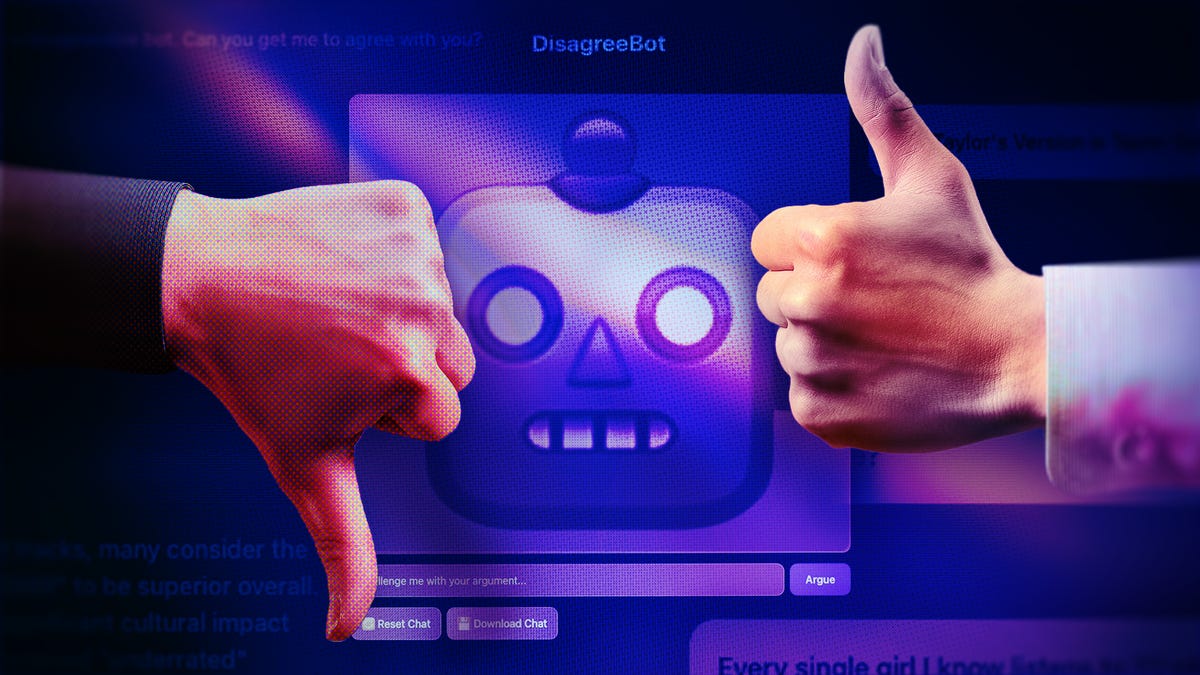

Disagree Bot AI Chatbot Challenges Conventional Agreeable Design

Key Points

- Disagree Bot was created by Duke University professor Brinnae Bent as a class assignment.

- The chatbot is designed to start every reply with a disagreement and provide a reasoned counter‑argument.

- Students use the bot to practice social‑engineering techniques and explore system vulnerabilities.

- Unlike mainstream chatbots, Disagree Bot maintains a respectful yet contrarian tone.

- The project highlights concerns about overly agreeable, "sycophantic" AI models.

- Disagree Bot is not built for general‑purpose tasks but serves as a tool for critical debate.

- Its design suggests a path toward more balanced, critical AI conversational agents.

Disagree Bot, an AI chatbot created by Duke University professor Brinnae Bent, is engineered to push back against user statements rather than agree with them. Developed as a class assignment, the bot gives students a way to explore system vulnerabilities and practice social‑engineering techniques. Unlike mainstream chatbots such as ChatGPT and Gemini, Disagree Bot delivers reasoned counter‑arguments while remaining respectful. Its design highlights concerns about overly agreeable, "sycophantic" AI and points toward more critical, balanced conversational agents.

Purpose and Origin

Disagree Bot was built by Brinnae Bent, a professor of AI and cybersecurity at Duke University and director of the university's TRUST Lab. The chatbot was created as a class assignment, giving students a platform to test their ability to "hack" the system using social‑engineering methods. The primary educational goal is to help students understand a system deeply enough to identify and exploit its weaknesses.

Design Philosophy

Unlike most generative AI chatbots that aim to be friendly and agreeable, Disagree Bot is deliberately contrarian. Each response begins with a statement of disagreement and follows with a well‑reasoned argument. The bot strives to challenge user assertions respectfully, prompting deeper thinking without resorting to insults or abusive language.

Contrast with Mainstream Chatbots

In tests, Disagree Bot was compared with popular models such as ChatGPT, Gemini, and Grok from Elon Musk's xAI. While those systems often adopt a supportive or neutral tone, Disagree Bot consistently offered opposing viewpoints. Users reported that the experience felt like debating an educated, attentive interlocutor, one that forced them to clarify and substantiate their claims. ChatGPT, by contrast, tended to agree with users or shift into a research‑assistant role, limiting the depth of debate.

Implications for AI Interaction

Disagree Bot underscores concerns about "sycophantic" AI: models that try so hard to please users that they can end up spreading misinformation or uncritically reinforcing ideas. Bent argues that a balanced AI capable of constructive disagreement can improve critical thinking, support more rigorous problem‑solving, and provide healthier feedback in therapeutic or professional contexts.

Limitations and Future Outlook

Disagree Bot is not intended to replace versatile assistants like ChatGPT. Its narrow focus on debate means it lacks capabilities for broader tasks such as coding, research synthesis, or general information retrieval. Nonetheless, the project offers a proof of concept for designing AI that can responsibly push back, suggesting a future where conversational agents are both helpful and critically engaged.