Anthropic Introduces Memory Feature for Claude Chatbot

Key Points

- Anthropic is adding a memory feature to Claude for paid subscribers.

- The upgrade lets Claude retain details from previous chats without prompting.

- Team and Enterprise users already have access; Pro users will receive it soon.

- Users can view specific memories, toggle them on or off, edit them via natural language, or delete them.

- Distinct memory spaces prevent overlap between different projects or contexts.

- The feature narrows the gap with competitors like ChatGPT and Gemini.

- Anthropic emphasizes transparency and user control over stored information.

- Experts note potential risks of over‑reliance on persistent AI memory.

Anthropic is rolling out a new memory capability for its Claude chatbot that lets the AI retain information from prior conversations for paid users. The feature, initially available to Team and Enterprise customers, will be extended to Pro subscribers over the coming days. Users can activate, edit, or delete memories through simple conversation, and can create separate memory spaces to keep contexts distinct. The upgrade aims to boost Claude’s usefulness and keep it competitive with rival AI assistants that already offer similar memory functions.

Feature Overview

Anthropic announced that it is extending Claude’s memory upgrade to all paid subscribers. The enhancement lets the AI retain details from earlier chats, so users do not have to repeat information. Team and Enterprise users have had access since September; the company is now expanding the feature to Pro users, with the rollout taking place over the next several days.

User Controls and Transparency

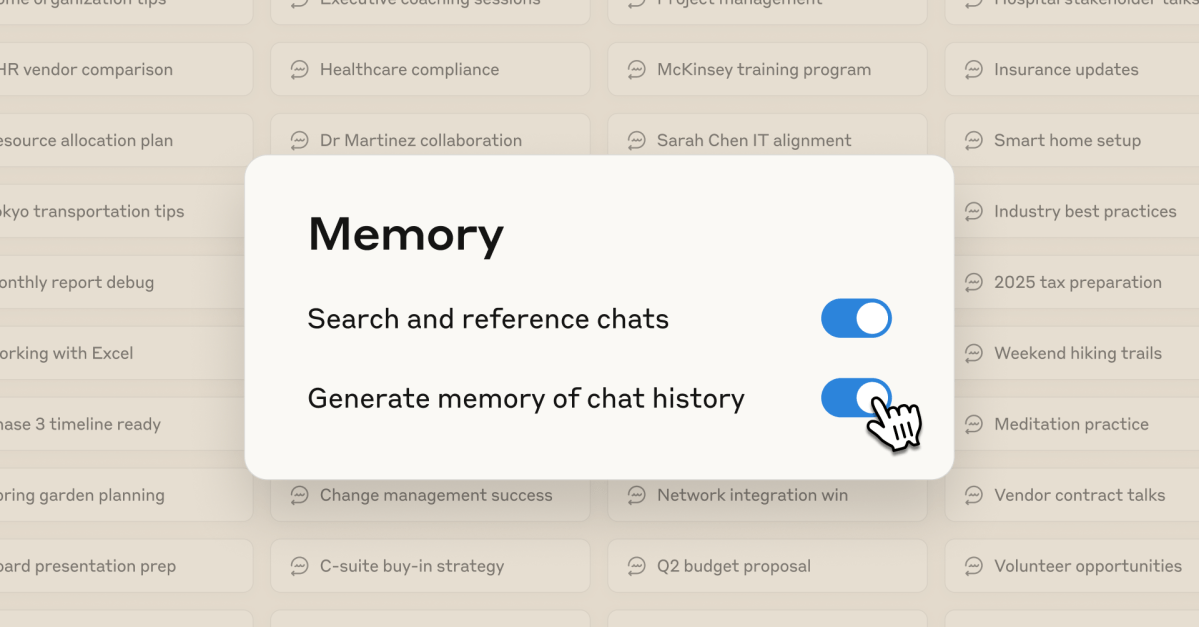

Anthropic emphasizes transparency in how Claude stores information. Users will see exactly what the model remembers, rather than vague summaries. The interface allows specific memories to be toggled on or off, edited through natural language commands, or removed entirely. Additionally, users can set up distinct memory spaces, keeping separate projects or personal and professional contexts from overlapping.

Comparison with Competitors

The memory upgrade brings Claude closer to the capabilities of rival chatbots such as OpenAI’s ChatGPT and Google’s Gemini, both of which introduced similar features last year. Previously, Claude could only recall past conversations if explicitly prompted, and its memory functions lagged behind those of its competitors. By enabling seamless recall and export of memories, Anthropic hopes to reduce the friction of starting new sessions and encourage users to stay within its ecosystem.

Potential Benefits

With the ability to retain context, Claude can provide more personalized and efficient assistance across multiple interactions. Users working on ongoing projects will no longer need to re‑enter background details, and the distinct memory spaces keep details from one project or context from leaking into another. The feature also supports importing memories from other AI platforms via copy‑and‑paste, offering flexibility for those transitioning between services.

Concerns and Criticisms

While many welcome the added convenience, some experts warn that persistent memory could amplify delusional thinking or “AI psychosis” in users who rely too heavily on the model’s responses. Anthropic acknowledges these concerns but highlights that users retain full control over what is remembered and can delete any information at will.

Future Outlook

Anthropic has not disclosed plans to extend the memory feature to free users. The company’s stated goal is to maintain clear visibility into what the AI knows and to avoid lock‑in by allowing memories to be exported at any time. As AI assistants continue to evolve, the memory capability positions Claude as a more competitive offering in the rapidly expanding conversational AI market.